areas where the surface curves much more steeply in one dimension than in another, which are common around local optima. second-order methods such as Newton's method.

We will not discuss algorithms that are infeasible to compute in practice for high-dimensional data sets, e.g. In the following, we will outline some algorithms that are widely used by the deep learning community to deal with the aforementioned challenges. These saddle points are usually surrounded by a plateau of the same error, which makes it notoriously hard for SGD to escape, as the gradient is close to zero in all dimensions. points where one dimension slopes up and another slopes down. argue that the difficulty arises in fact not from local minima but from saddle points, i.e.

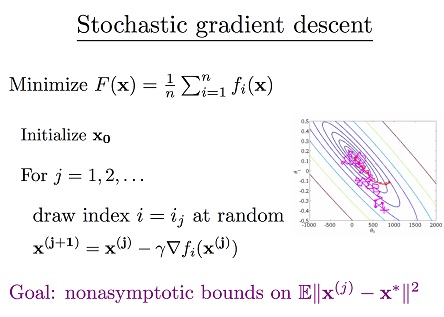

#Stochastic gradient descent update#

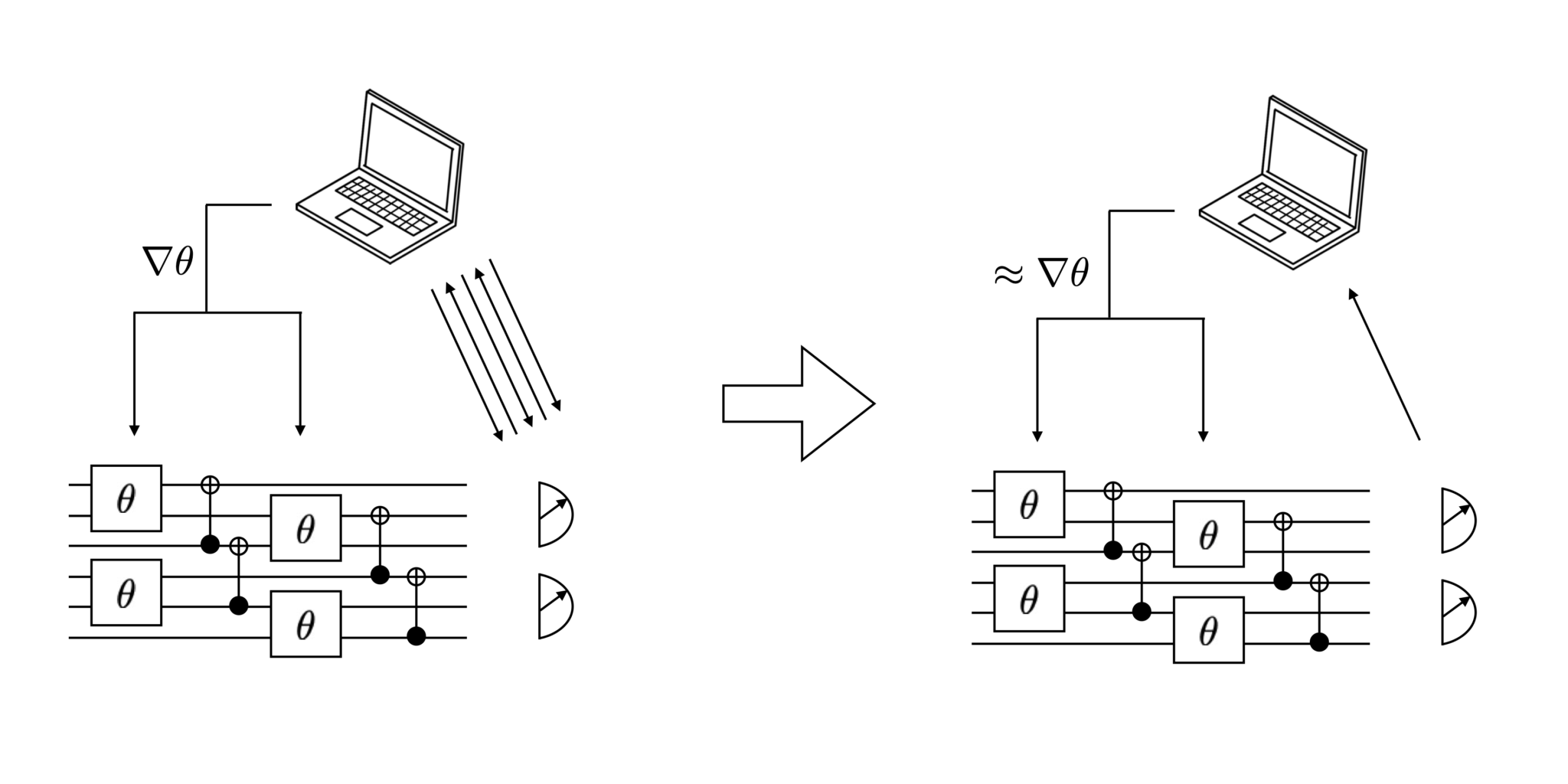

If our data is sparse and our features have very different frequencies, we might not want to update all of them to the same extent, but perform a larger update for rarely occurring features.Īnother key challenge of minimizing highly non-convex error functions common for neural networks is avoiding getting trapped in their numerous suboptimal local minima. Īdditionally, the same learning rate applies to all parameter updates. These schedules and thresholds, however, have to be defined in advance and are thus unable to adapt to a dataset's characteristics. reducing the learning rate according to a pre-defined schedule or when the change in objective between epochs falls below a threshold. Learning rate schedules try to adjust the learning rate during training by e.g. A learning rate that is too small leads to painfully slow convergence, while a learning rate that is too large can hinder convergence and cause the loss function to fluctuate around the minimum or even to diverge. Vanilla mini-batch gradient descent, however, does not guarantee good convergence, but offers a few challenges that need to be addressed:Ĭhoosing a proper learning rate can be difficult. Params = params - learning_rate * params_grad Challenges Params_grad = evaluate_gradient(loss_function, batch, params) In code, instead of iterating over examples, we now iterate over mini-batches of size 50: for i in range(nb_epochs):įor batch in get_batches(data, batch_size=50): Gradient descent is a way to minimize an objective function \(J(\theta)\) parameterized by a model's parameters \(\theta \in \mathbb\) for simplicity. Finally, we will consider additional strategies that are helpful for optimizing gradient descent. We will also take a short look at algorithms and architectures to optimize gradient descent in a parallel and distributed setting. 
Subsequently, we will introduce the most common optimization algorithms by showing their motivation to resolve these challenges and how this leads to the derivation of their update rules. We will then briefly summarize challenges during training. We are first going to look at the different variants of gradient descent. This blog post aims at providing you with intuitions towards the behaviour of different algorithms for optimizing gradient descent that will help you put them to use. These algorithms, however, are often used as black-box optimizers, as practical explanations of their strengths and weaknesses are hard to come by. lasagne's, caffe's, and keras' documentation). At the same time, every state-of-the-art Deep Learning library contains implementations of various algorithms to optimize gradient descent (e.g. Gradient descent is one of the most popular algorithms to perform optimization and by far the most common way to optimize neural networks. Additional strategies for optimizing SGD.Gradient descent optimization algorithms.The discussion provides some interesting pointers to related work and other techniques. Update 21.06.16: This post was posted to Hacker News. Update : Added derivations of AdaMax and Nadam. Update : Most of the content in this article is now also available as slides. Update : Added a note on recent optimizers. Note: If you are looking for a review paper, this blog post is also available as an article on arXiv. This post explores how many of the most popular gradient-based optimization algorithms actually work.
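Ravines, areas where the surface curves much more steeply in one dimension than in another, can be illustrated with a minimal sketch in plain Python. It assumes a hypothetical quadratic \(f(x, y) = \tfrac{1}{2}(x^2 + 100\,y^2)\), whose curvature along \(y\) is 100 times that along \(x\); the function `gd_on_ravine` and the step sizes are illustrative choices, not part of the post. Plain gradient descent on this surface is only stable for learning rates below \(2/100\), so a step size that is as large as possible for the shallow \(x\) direction already makes the iterates bounce from one wall of the ravine to the other along \(y\).

```python
def gd_on_ravine(lr, steps, start=(1.0, 1.0)):
    """Plain gradient descent on the hypothetical f(x, y) = 0.5 * (x**2 + 100 * y**2).
    The gradient is (x, 100 * y): the surface is 100 times steeper along y than along x."""
    x, y = start
    path = [(x, y)]
    for _ in range(steps):
        # simultaneous update of both coordinates against the gradient
        x, y = x - lr * x, y - lr * 100 * y
        path.append((x, y))
    return path


path = gd_on_ravine(lr=0.019, steps=50)
# The sign of y flips on every step: the iterates bounce between the ravine walls.
print([y > 0 for _, y in path[:6]])
# Meanwhile progress along the shallow x direction is slow.
print(f"x after 50 steps: {path[-1][0]:.3f}")
```

With `lr=0.019` the \(y\) coordinate alternates in sign on every step while \(x\) is still far from the optimum at 0 after 50 steps; raising the rate slightly to 0.021 makes the \(y\) coordinate diverge.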
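The mini-batch loop in the text relies on `get_batches` and `evaluate_gradient`, which the post does not define. The following self-contained sketch fills them in under stated assumptions: a hypothetical one-dimensional linear model \(y \approx w x + b\), a mean-squared-error loss hard-coded in the gradient helper, noise-free toy data drawn from \(y = 3x + 1\), and arbitrary hyperparameters. Each inner step applies \(\theta \leftarrow \theta - \eta \cdot \nabla_\theta J(\theta)\), with the gradient estimated on the current mini-batch only. The per-epoch shuffle is an added assumption, not part of the loop in the text.

```python
import random


def get_batches(data, batch_size):
    """Hypothetical helper: yield consecutive slices of `data` of length `batch_size`."""
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size]


def evaluate_gradient(batch, params):
    """Hypothetical helper: gradient of the mean squared error of y ≈ w*x + b on one mini-batch."""
    w, b = params
    grad_w = grad_b = 0.0
    for x, y in batch:
        err = (w * x + b) - y  # prediction error on one example
        grad_w += 2 * err * x
        grad_b += 2 * err
    return [grad_w / len(batch), grad_b / len(batch)]


def minibatch_gd(data, params, learning_rate=0.1, nb_epochs=200, batch_size=50, seed=0):
    rng = random.Random(seed)
    data = list(data)  # copy, so shuffling does not reorder the caller's list
    for _ in range(nb_epochs):
        rng.shuffle(data)  # assumption: a fresh example order every epoch
        for batch in get_batches(data, batch_size):
            params_grad = evaluate_gradient(batch, params)
            params = [p - learning_rate * g for p, g in zip(params, params_grad)]
    return params


# Noise-free toy data from y = 3x + 1 with x drawn uniformly from [0, 1).
rng = random.Random(42)
data = [(x, 3 * x + 1) for x in (rng.random() for _ in range(500))]
w, b = minibatch_gd(data, [0.0, 0.0])
print(f"w = {w:.3f}, b = {b:.3f}")
```

Because every mini-batch of this noise-free data shares the same minimizer, the estimates settle on the generating values \(w = 3\), \(b = 1\); with noisy data, mini-batch updates would instead keep fluctuating around the minimum.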
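The effect of the learning rate described in the first challenge can be reproduced on the simplest possible objective. This sketch assumes \(J(\theta) = \theta^2\), whose gradient is \(2\theta\), so each update is \(\theta \leftarrow (1 - 2\eta)\,\theta\); `run_gd` and the four rates are illustrative choices.

```python
def run_gd(learning_rate, theta=1.0, steps=20):
    """Plain gradient descent on J(theta) = theta**2, whose gradient is 2 * theta.
    Returns the whole trajectory, starting value included."""
    trajectory = [theta]
    for _ in range(steps):
        theta = theta - learning_rate * 2 * theta
        trajectory.append(theta)
    return trajectory


for lr in (0.01, 0.4, 0.9, 1.1):
    print(f"learning rate {lr}: theta after 20 steps = {run_gd(lr)[-1]:.3g}")
```

With \(\eta = 0.01\) the iterate is still far from 0 after 20 steps, \(\eta = 0.4\) converges quickly, \(\eta = 0.9\) overshoots and alternates sign around the minimum while shrinking, and \(\eta = 1.1\) diverges because \(|1 - 2\eta| > 1\).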
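The two kinds of learning rate schedule mentioned in the second challenge, a pre-defined schedule and a reduction when the change in objective between epochs falls below a threshold, can be sketched as follows. `step_decay` and `PlateauDecay` are hypothetical names, not a particular library's API, and the defaults are arbitrary. Note that `drop`, `epochs_per_drop`, `factor`, and `threshold` all have to be fixed before training starts, which is the limitation the text points out.

```python
def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Pre-defined schedule: multiply the rate by `drop` every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


class PlateauDecay:
    """Multiply the rate by `factor` whenever the decrease in the objective
    between two consecutive epochs is smaller than `threshold`."""

    def __init__(self, lr, factor=0.5, threshold=1e-3):
        self.lr = lr
        self.factor = factor
        self.threshold = threshold
        self.prev_loss = None

    def step(self, epoch_loss):
        # compare against the previous epoch's objective, if there is one
        if self.prev_loss is not None and self.prev_loss - epoch_loss < self.threshold:
            self.lr *= self.factor
        self.prev_loss = epoch_loss
        return self.lr


# Usage with made-up epoch losses: the third epoch barely improves, so the rate is halved.
schedule = PlateauDecay(lr=0.1)
rates = [schedule.step(loss) for loss in (1.0, 0.5, 0.4999, 0.3)]
print([step_decay(0.1, e) for e in (0, 9, 10, 25)], rates)
```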
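The third challenge, one shared learning rate for features of very different frequencies, can be observed directly. This sketch assumes a hypothetical two-feature linear model trained by per-example SGD with a squared loss, on toy data in which feature 0 is present in every example and feature 1 in only one example out of 20; the true weights are both 1.

```python
def sgd_epoch(examples, lr=0.1):
    """One pass of per-example SGD on a linear model y ≈ w·x with squared loss.
    Every weight shares the same learning rate `lr`."""
    w = [0.0, 0.0]
    updates = [0, 0]  # number of steps in which each feature was present
    for x, y in examples:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for j, xj in enumerate(x):
            if xj != 0.0:
                updates[j] += 1
            w[j] -= lr * 2 * err * xj  # gradient of err**2 w.r.t. w[j] is 2*err*x[j]
    return w, updates


# Hypothetical toy data with true weights [1, 1]:
# feature 0 is always on, feature 1 only in every 20th example.
examples = [
    ([1.0, 1.0], 2.0) if i % 20 == 0 else ([1.0, 0.0], 1.0)
    for i in range(100)
]

w, updates = sgd_epoch(examples)
print("weights:", [round(v, 3) for v in w], "updates per feature:", updates)
```

After one epoch the weight of the frequent feature is close to its target, while the weight of the rare feature, updated only 5 times with the same step size, still lags well behind.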
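A saddle point can be illustrated with the hypothetical function \(f(x, y) = x^2 - y^2\): it slopes up along \(x\) and down along \(y\), and its gradient \((2x, -2y)\) vanishes at the origin. Unlike the saddle points described in the text, this toy has no surrounding plateau; it only shows that near a saddle the gradient is small, so gradient descent can spend many iterations there. The helper `steps_to_escape` (an illustrative name) counts how many steps it takes for \(|y|\) to exceed 1 when starting close to the ridge \(y = 0\).

```python
def grad(x, y):
    """Gradient of f(x, y) = x**2 - y**2, which has a saddle point at the origin."""
    return 2 * x, -2 * y


def steps_to_escape(y0, lr=0.1, x0=1.0, max_steps=10_000):
    """Run plain gradient descent from (x0, y0) and return the number of steps
    until |y| exceeds 1, or `max_steps` if that never happens."""
    x, y = x0, y0
    for step in range(max_steps):
        if abs(y) > 1.0:
            return step
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy
    return max_steps


for y0 in (1e-1, 1e-3, 1e-6, 0.0):
    print(f"y0 = {y0:g}: {steps_to_escape(y0)} steps")
```

With a learning rate of 0.1 the \(y\) coordinate grows by a factor of 1.2 per step, so the number of steps grows with \(\log(1/|y_0|)\); starting exactly at \(y_0 = 0\), plain gradient descent never leaves the saddle.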
